|

Hi, I am Suny! I am joining Microsoft AI as a Member of Technical Staff. Before that, I did a DPhil (PhD) at the Visual Geometry Group, Oxford, where I was lucky to be advised by Prof. Andrea Vedaldi and Prof. Christian Rupprecht. During my PhD, I was a summer intern at Google DeepMind with Olivia Wiles. I also started a student research group within the AI society in Oxford, OxAI Labs, where we did research on fairness and bias. Before my PhD, I graduated with an MEng in Engineering Science from the University of Oxford. My nickname is pronounced like "Sunny" ☀️ despite missing an "n". Email / Google Scholar / LinkedIn / Github |

|

|

I'm interested in (multimodal) LLMs, vision + language, un/self-supervised computer vision, 3D, and fairness. |

|

Paul Engstler*, Aleksandar Shtedritski*, Iro Laina, Christian Rupprecht, Andrea Vedaldi * Equal contribution. arXiv preprint, 2025 arXiv / website / code / demo We present a training-free and optimisation-free method for generating 3D worlds. |

|

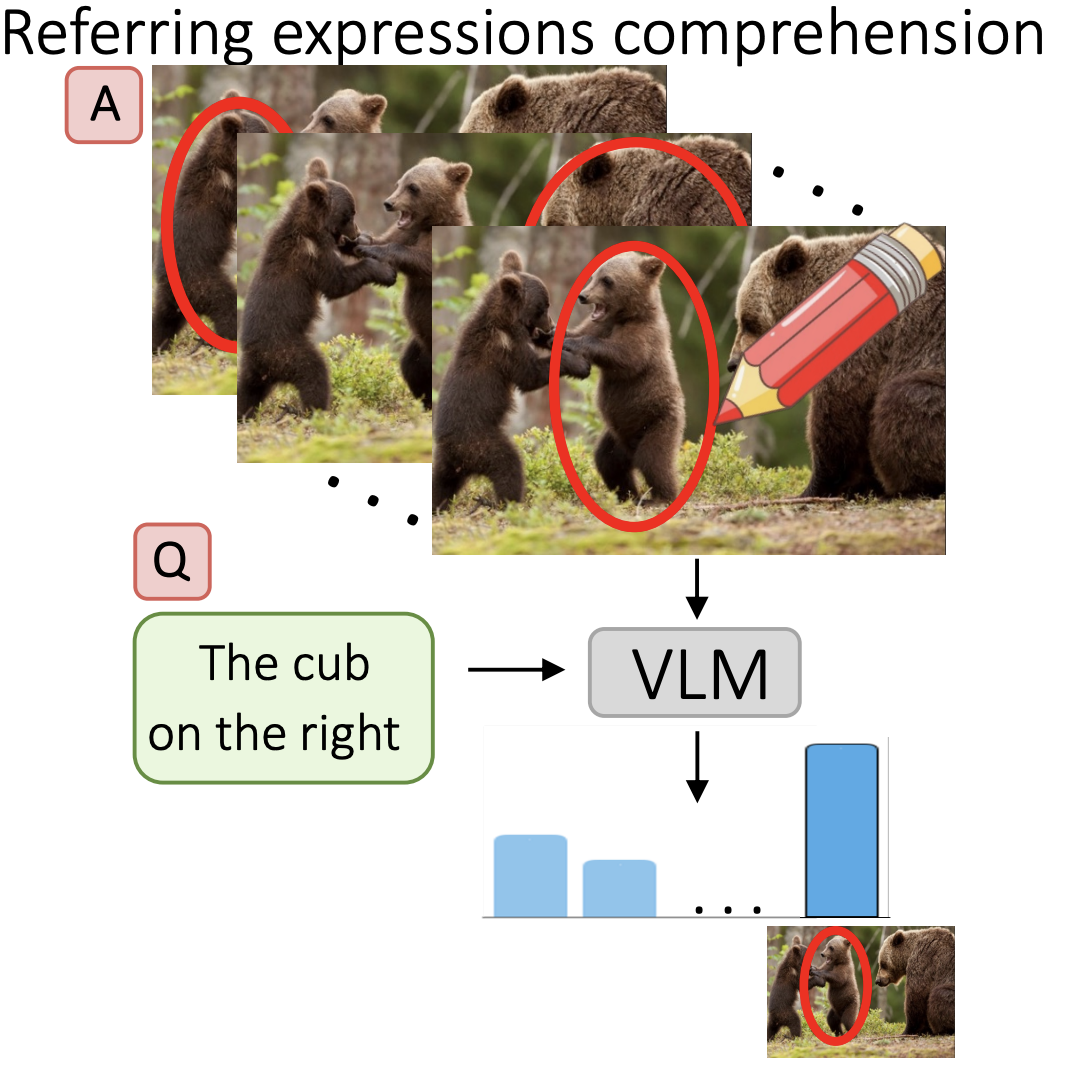

Jana Zeller, Aleksandar Shtedritski, Christian Rupprecht Coming soon website We automatically discover visual prompts for CLIP. Interestingly, they look like red circles. |

|

Aleksandar Shtedritski, Christian Rupprecht, Andrea Vedaldi ECCV, 2024 arXiv / website / code / demo We present an unsupervised method for shape-to-image correspondences. |

|

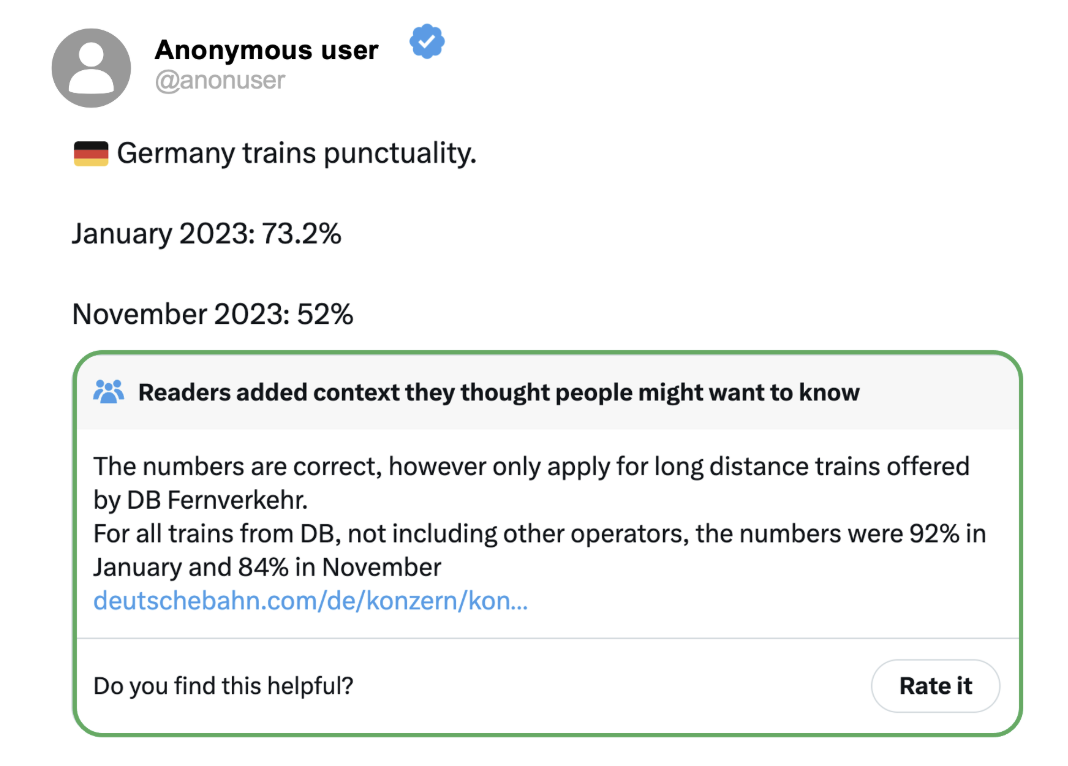

Tim Franzmeyer*, Aleksandar Shtedritski*, Samuel Albanie, Philip Torr, Joao F. Henriques, Jakob E. Foerster ACL, 2024 arXiv / website / code A living benchmark for LLMs that addresses issues like test data contamination and benchmark overfitting. |

|

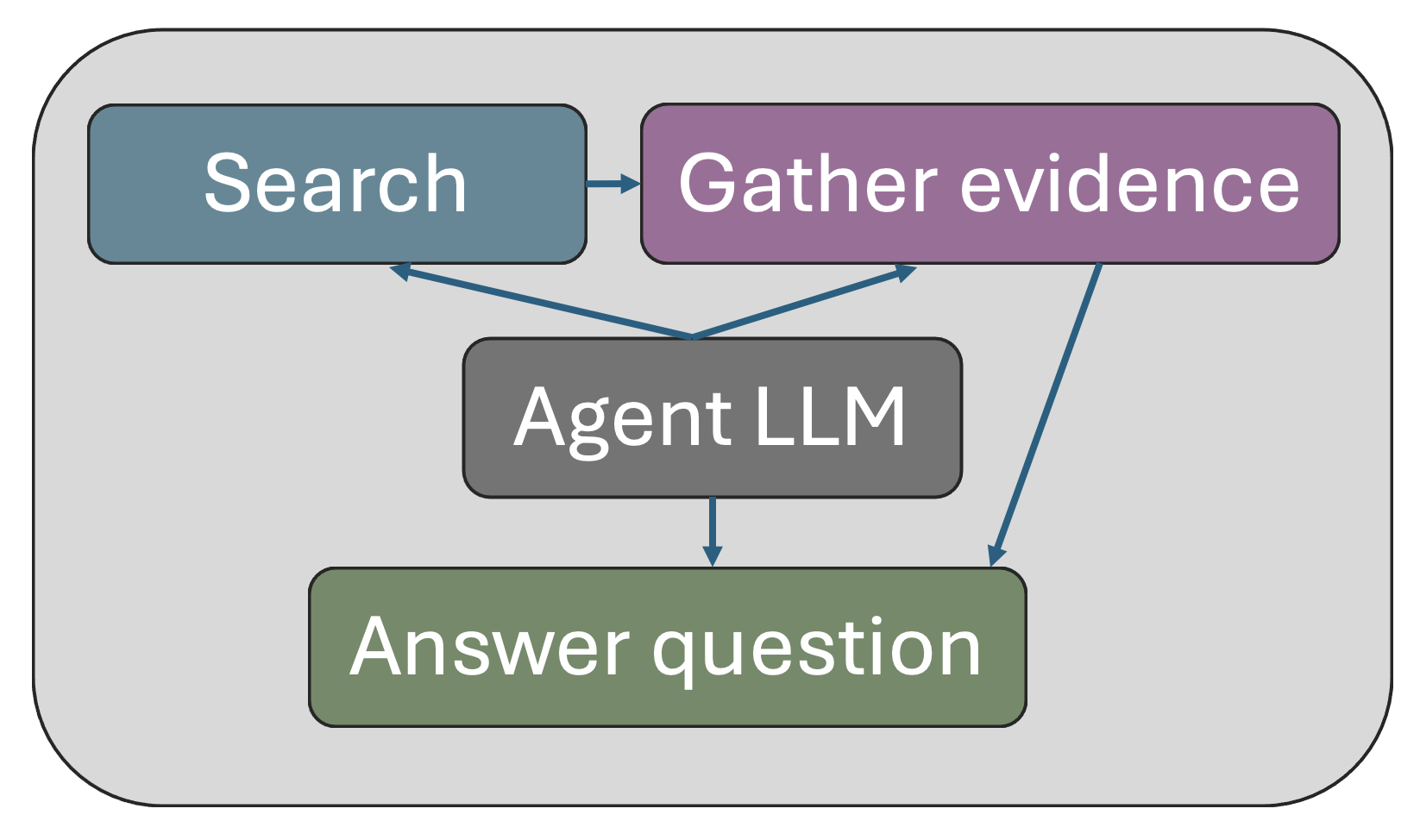

Jakub Lala, Odhran O'Donoghue, Aleksandar Shtedritski, Sam Cox, Samuel G Rodriques, Andrew White Technical report, 2023 arXiv / code We present a RAG agent for scientific research, and a benchmark for information retrieval in sciences. |

|

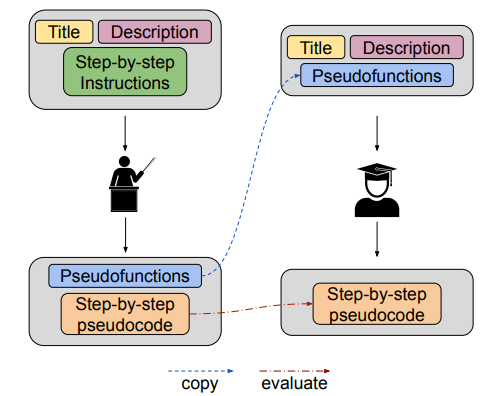

Odhran O'Donoghue, Aleksandar Shtedritski, John Ginger, Ralph Abboud, Ali Essa Ghareeb, Justin Booth, Samuel G Rodriques EMNLP, 2023 arXiv / code A framework for evaluating LLMs on long planning tasks, with an application in biology. |

|

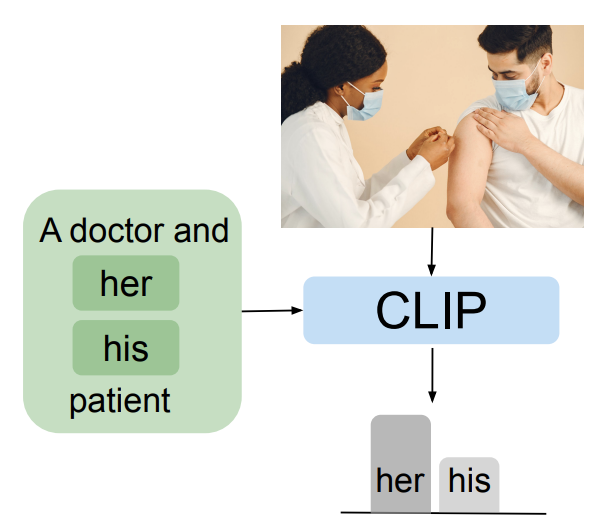

Aleksandar Shtedritski, Christian Rupprecht, Andrea Vedaldi ICCV, 2023 (Oral Presentation) arXiv / code We discover an emergent ability of CLIP, where drawing a red circle focuses the global image description on the region inside the circle. |

|

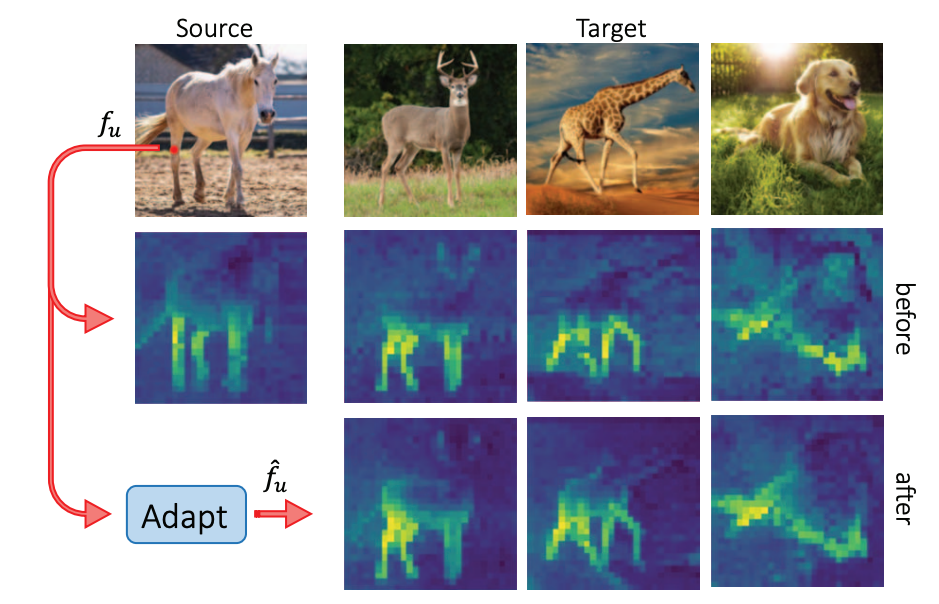

Aleksandar Shtedritski, Andrea Vedaldi, Christian Rupprecht ICCV Workshop on Representation Learning with Limited Data, 2023 (Oral Presentation) paper We present a method for learning robust and generalizable semantic correspondences. |

|

Siobhan Mackenzie Hall, Fernanda Gonçalves Abrantes, Hanwen Zhu, Grace Sodunke, Aleksandar Shtedritski, Hannah Rose Kirk NeurIPS Datasets and Benchmarks, 2023 arXiv / code A new dataset for benchmarking gender bias in vision-language models. |

|

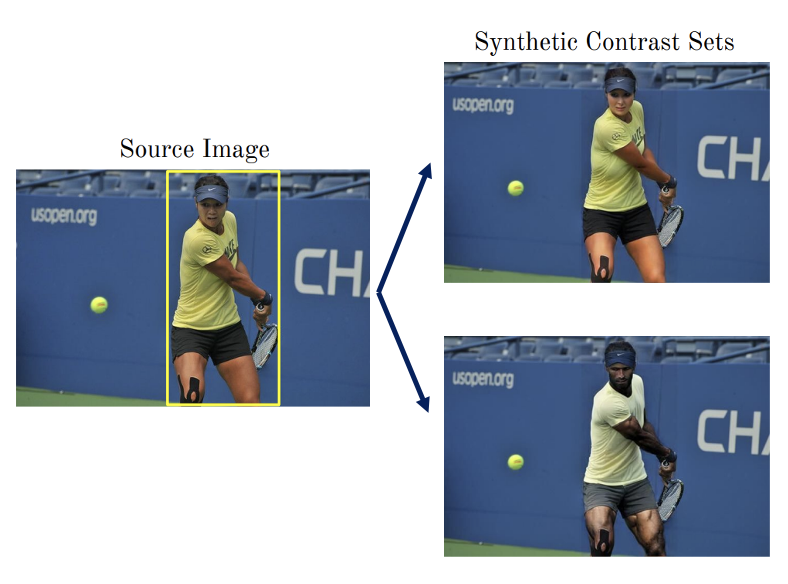

Brandon Smith, Miguel Farinha, Siobhan Mackenzie Hall, Hannah Rose Kirk, Aleksandar Shtedritski, Max Bain NeurIPS Workshop SyntheticData4ML, 2023 arXiv / code We demonstrate that the datasets used to evaluate the bias of VLMs are themselves biased. We propose to debias these datasets using synthetic contrast sets. |

|

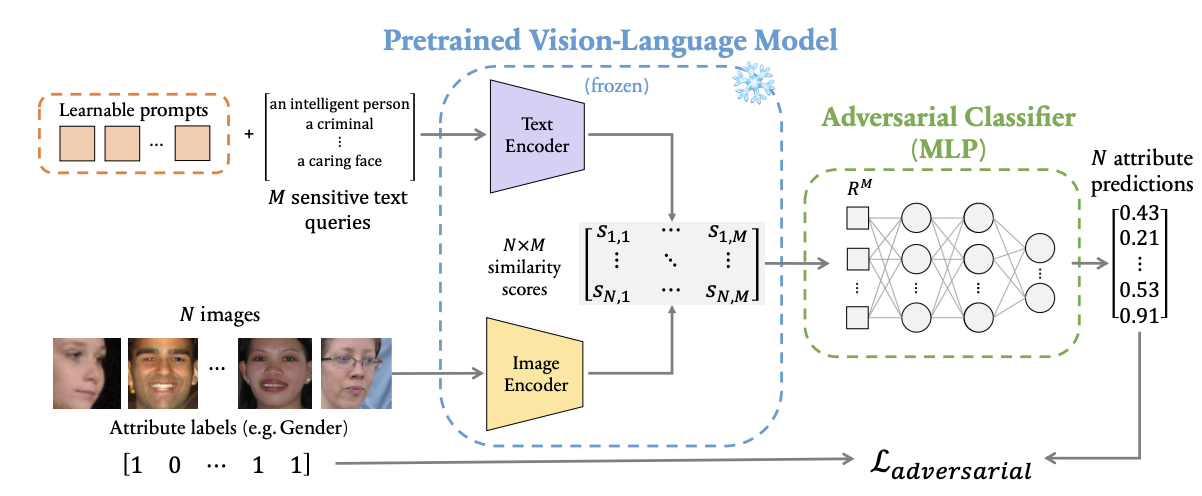

Hugo Bergh, Siobhan Mackenzie Hall, Wonsuk Yang, Yash Bhalgat, Hannah Rose Kirk, Aleksandar Shtedritski, Max Bain AACL-IJNCLP, 2022 arXiv / code We propose a lightweight method to debias CLIP. |

|

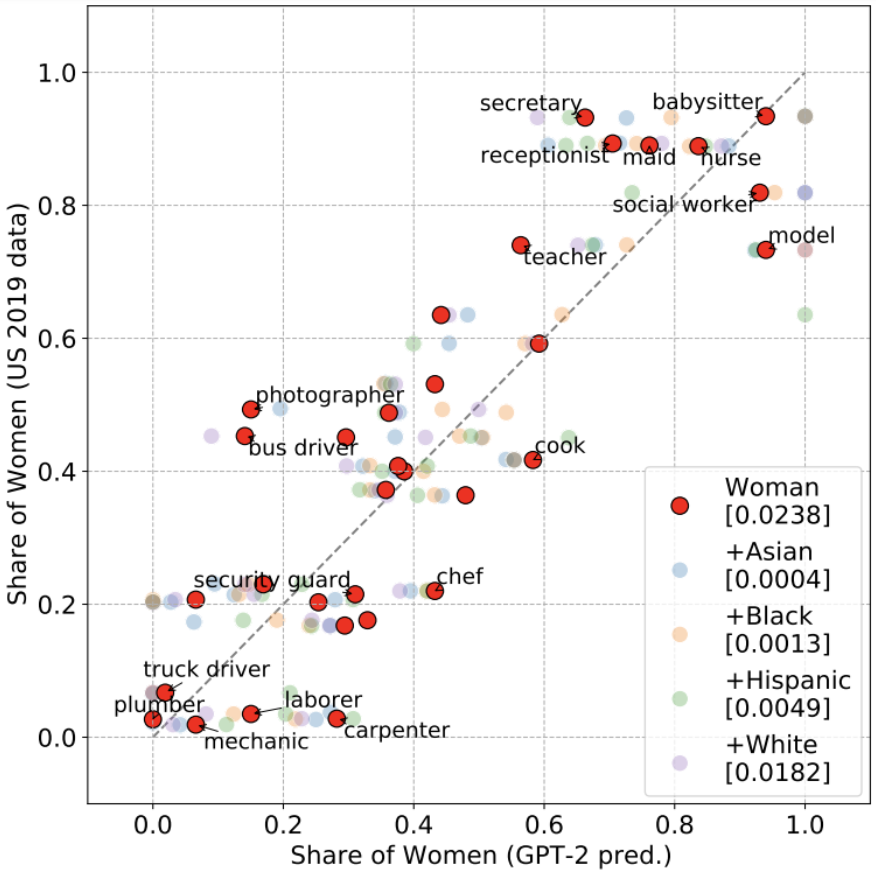

Hannah Rose Kirk, Yennie Jun, Haider Iqbal, Elias Benussi, NeurIPS, 2021 arXiv / code An in-depth analysis of intersectional biases of GPT-2. |

|

Reviewer: NeurIPS, ICLR, CVPR, ICCV, ECCV

Teaching Assistant

|

|

This website template is borrowed from Jon Barron. Thanks! |